Dramaqueen

Member

Many people confuse 4K with HDR, but these two characteristics of an image have nothing to do with each other. 4K is a definition, while HDR describes the dynamic range of an image. Didn't follow what I just wrote? Don't worry: I will explain everything as simply as possible, so that you will soon be able to show off your knowledge on the subject!

4K

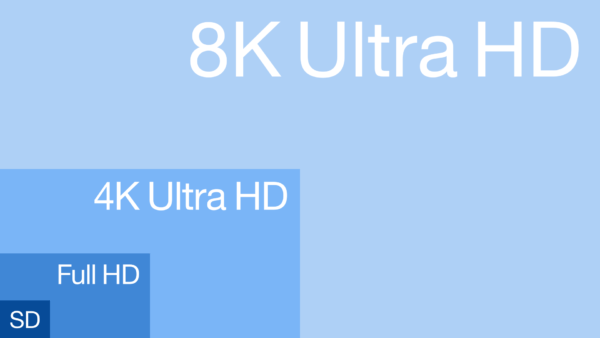

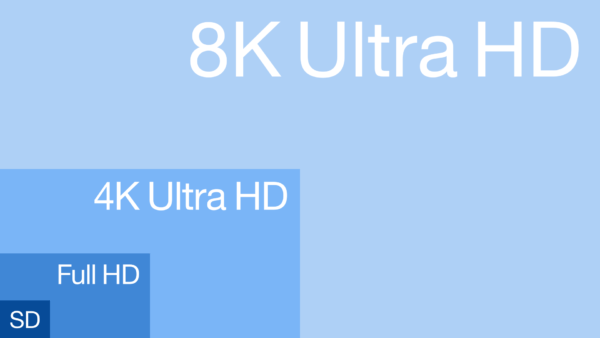

4K is a definition, that is to say a number of pixels. To be precise, 4K has 4096 pixels per line over 2160 lines, which gives 8,847,360 pixels in total, or about 8.8 megapixels. The "K" in "4K" stands for 1000 (as in "kilo"), so "4K" means roughly 4000: each line of a 4K image contains about 4000 pixels.
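If you want to check the arithmetic yourself, here is a small Python sketch. The function names are just made up for illustration; it simply multiplies the two dimensions given above and shows where the "4K" name comes from.

```python
# Pixel count of a 4K frame (4096 x 2160), as given in the text above.
WIDTH_4K = 4096
HEIGHT_4K = 2160

def pixel_count(width: int, height: int) -> int:
    """Total number of pixels in a width x height image."""
    return width * height

def megapixels(width: int, height: int) -> float:
    """Pixel count expressed in megapixels (1 MP = 1,000,000 pixels)."""
    return pixel_count(width, height) / 1_000_000

total = pixel_count(WIDTH_4K, HEIGHT_4K)
print(f"{total:,} pixels, about {megapixels(WIDTH_4K, HEIGHT_4K):.1f} MP")
# prints "8,847,360 pixels, about 8.8 MP"

# "K" = 1000: 4096 pixels per line is roughly 4 thousand, hence "4K".
print(round(WIDTH_4K / 1000))  # prints 4
```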

4K is often confused with Ultra HD, but they are not quite the same. An Ultra HD image has only 3840 pixels per line instead of 4096 for 4K, for a total of about 8.3 megapixels (3840 × 2160 = 8,294,400), slightly less than 4K. But the biggest difference lies in the aspect ratio: Ultra HD is in 16:9 format (3840/2160 = 16/9), while 4K is closer to 17:9 (4096/2160 ≈ 17.07/9). 4K is therefore not a consumer definition: television screens, for example, are in 16:9 format, so strictly speaking we should talk about Ultra HD in their case. For the sake of simplicity, however, the two terms have become interchangeable.
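To compare the two formats side by side, this little Python sketch computes the pixel count and the exact aspect ratio of each one (the dictionary and function names are only illustrative). Python's `fractions.Fraction` reduces the ratio to lowest terms, and multiplying it by 9 expresses it as "x:9" so both formats are easy to compare.

```python
from fractions import Fraction

# The two formats discussed above: DCI 4K and consumer Ultra HD.
FORMATS = {
    "4K (DCI)": (4096, 2160),
    "Ultra HD": (3840, 2160),
}

def aspect_ratio(width: int, height: int) -> Fraction:
    """Exact width/height ratio, reduced to lowest terms."""
    return Fraction(width, height)

for name, (w, h) in FORMATS.items():
    ratio = aspect_ratio(w, h)
    # Show the reduced ratio, then the same ratio rescaled to "x:9".
    print(f"{name}: {w}x{h}, {w * h / 1e6:.1f} MP, "
          f"ratio {ratio.numerator}:{ratio.denominator} ≈ {float(ratio) * 9:.2f}:9")

# prints:
# 4K (DCI): 4096x2160, 8.8 MP, ratio 256:135 ≈ 17.07:9
# Ultra HD: 3840x2160, 8.3 MP, ratio 16:9 ≈ 16.00:9
```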

HDR

The acronym HDR stands for High Dynamic Range. Dynamic range is the difference between the luminance of the brightest highlights (the light peaks) and that of the deepest black, and HDR offers a much wider range than SDR (Standard Dynamic Range). For example, for a television screen, the film and television industry recommends around 120 nits for light peaks in SDR, while in HDR they can exceed 1000 nits, almost a factor of 10! HDR images are therefore much more realistic, since in everyday life we regularly perceive luminances above 10,000 nits.

To understand this better, we first need to know what luminance is. Its unit is the candela per square meter (cd/m²), also called the nit (1 cd/m² = 1 nit). One candela is approximately the luminous intensity of a candle, so 1 cd/m² is roughly the luminance you get with one candle per square meter. To return to the television example, a light peak of 120 cd/m² produces roughly the same luminance as 120 candles arranged over an area of 1 m². Now imagine the brightness of a 1000 cd/m² peak in HDR! Everything that shines (lamps, flames, the sun, etc.) therefore looks much more realistic thanks to HDR.
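The candle analogy boils down to one division: luminance is luminous intensity divided by the emitting area. Here is a rough Python sketch of it, assuming (as the text does) that one candle is about 1 candela, and simplifying the candles into one flat, uniform light source seen head-on. The constant and function names are hypothetical.

```python
# Rough candle analogy from the text: one candle is about 1 candela (cd),
# and luminance is luminous intensity per unit of emitting area (cd/m²).
# Simplifying assumption: the candles act as one flat, uniform source seen head-on.
CANDLE_CD = 1.0  # approximate intensity of one candle, in candelas

def luminance_nits(candles: float, area_m2: float) -> float:
    """Approximate luminance in cd/m² (nits) of `candles` spread over `area_m2`."""
    if area_m2 <= 0:
        raise ValueError("area must be positive")
    return candles * CANDLE_CD / area_m2

def candles_per_m2(nits: float) -> float:
    """How many candles per square meter give roughly `nits` of luminance."""
    return nits / CANDLE_CD

print(luminance_nits(120, 1.0))  # SDR TV peak: prints 120.0
print(candles_per_m2(1000))      # HDR TV peak: prints 1000.0 (candles on 1 m²)
```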

What about video projection? The gain is less spectacular than for television, because the factor is no longer 10 but only about 2: the luminance standard for light peaks is only 48 cd/m² in SDR and rises to 100 cd/m² in HDR. The difference is smaller, but still worthwhile.
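To put the television and projection figures side by side, this Python sketch divides the HDR peak by the SDR peak for each setup, using only the values quoted above (for TV, 1000 nits is the "can exceed" figure, so real screens may go higher). The names are illustrative.

```python
# Peak-luminance figures quoted in the text, in cd/m² (nits).
# For television, 1000 is a lower bound: HDR peaks can go higher.
PEAKS = {
    "television": {"sdr": 120, "hdr": 1000},
    "projection": {"sdr": 48, "hdr": 100},
}

def hdr_gain(sdr_peak: float, hdr_peak: float) -> float:
    """How many times brighter the HDR light peaks are than the SDR ones."""
    return hdr_peak / sdr_peak

for setup, peaks in PEAKS.items():
    print(f"{setup}: x{hdr_gain(peaks['sdr'], peaks['hdr']):.1f}")

# prints:
# television: x8.3
# projection: x2.1
```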

But HDR is not just about raising the luminance of light peaks: the whole tonal distribution changes, the idea being to get closer to how humans perceive light in daily life. In theory, HDR therefore also improves the visibility of detail in dark areas.

Things would be too simple if we stopped there, so let's turn to dynamic HDR. Video HDR comes in two flavors: static HDR, such as HDR10, and dynamic HDR, such as Dolby Vision or HDR10+. What is the difference? With static HDR, the signal is converted to on-screen brightness with the electro-optical transfer function* and a single set of metadata, so the same rendering is used throughout the video. With dynamic HDR, the metadata carried in the video stream can change scene by scene, or even frame by frame, so the rendering adapts on the fly. The result is an even more realistic image: you no longer feel like you are watching a video, but living it!
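To see why per-scene metadata helps, here is a deliberately simplified Python sketch. It is NOT how HDR10, HDR10+ or Dolby Vision really process the image: the "tone mapping" here just scales every value down proportionally when the content's declared peak exceeds the screen's peak. The scenes, their peak values and the 1000-nit TV are all hypothetical.

```python
from dataclasses import dataclass

# Deliberately simplified model (hypothetical, not a real HDR format):
# if the content's declared peak exceeds what the screen can show,
# every luminance value is scaled down proportionally.

@dataclass
class Scene:
    name: str
    peak_nits: float  # brightest value actually present in this scene

DISPLAY_PEAK = 1000.0  # hypothetical TV capable of 1000 nits

def tone_map(nits: float, content_peak: float, display_peak: float) -> float:
    """Scale `nits` so that `content_peak` fits within `display_peak`."""
    if content_peak <= display_peak:
        return nits  # content already fits: show it unchanged
    return nits * display_peak / content_peak

scenes = [Scene("night street", 600.0), Scene("sunlit beach", 4000.0)]

# Static HDR: a single peak value describes the whole video (its brightest scene).
static_peak = max(s.peak_nits for s in scenes)

for s in scenes:
    static = tone_map(s.peak_nits, static_peak, DISPLAY_PEAK)
    # Dynamic HDR: each scene carries its own metadata.
    dynamic = tone_map(s.peak_nits, s.peak_nits, DISPLAY_PEAK)
    print(f"{s.name}: static {static:.0f} nits, dynamic {dynamic:.0f} nits")

# prints:
# night street: static 150 nits, dynamic 600 nits
# sunlit beach: static 1000 nits, dynamic 1000 nits
```

With static metadata, the dim night scene is darkened because of the bright beach scene elsewhere in the video; with per-scene metadata, it is shown as mastered.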