Dramaqueen

Member

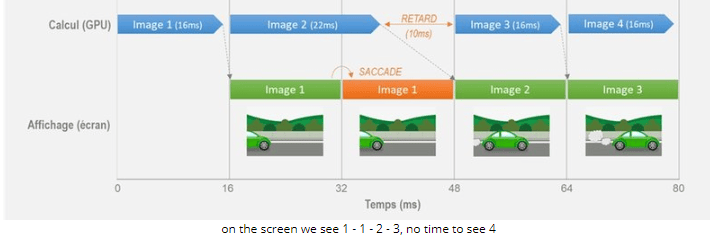

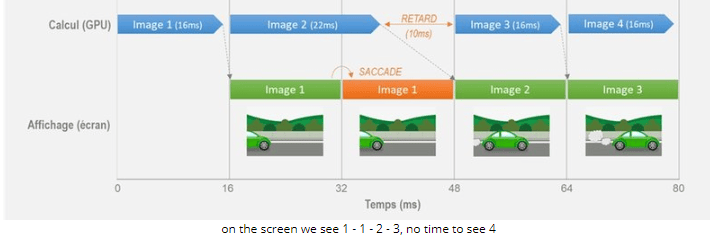

It is to avoid this particularly unpleasant phenomenon that V-Sync was designed (short for vertical synchronization, after the direction of scanning). Naturally, the first instinct was to settle things on the source side rather than change the standards of the monitor and TV industry: it is up to the source to align itself with the screen, not the other way around. A technique was therefore created that simply holds each image until the next screen refresh cycle before sending it. This is feasible with a simple clock locked to the screen refresh rate (adjustable by the user on PC because of the variety of monitors, or preset on console because TVs do not vary), but it nevertheless creates micro-jerks and delay.
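To make the holding mechanism concrete, here is a minimal sketch in Python. It is a simplified model, not how any real driver is written: the function name `vsync_present_times` and its parameters are made up for illustration, and it assumes that rendering of the next image only starts once the previous one has been sent to the screen.

```python
import math

def vsync_present_times(render_ms, refresh_hz=60.0, frames=6):
    """Times (in ms) at which each image reaches the screen with V-Sync.

    Assumption: the next image starts rendering only after the previous
    one has been sent, and every image takes the same time to render.
    """
    period = 1000.0 / refresh_hz          # duration of one refresh cycle
    shown = []
    start = 0.0
    for _ in range(frames):
        ready = start + render_ms         # the image is finished here...
        # ...but it is held until the next refresh tick of the screen.
        tick = math.ceil(ready / period - 1e-9) * period
        shown.append(tick)
        start = tick                      # next render begins after sending
    return shown

# An image that takes 17 ms just misses the 16.67 ms cycle of a 60Hz screen.
times = vsync_present_times(17.0)
intervals = [round(b - a, 2) for a, b in zip(times, times[1:])]
print(intervals)
```

In this model, an image that needs 17 ms is shown only every second refresh, so the time spent waiting for the tick is pure delay.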

How does VRR work?

Recall here that the principle of a fixed refresh rate on our monitors and TVs is an old legacy of the era of cathode ray tubes and their necessary compatibility with electrical distribution networks. Hence the 50Hz screens in France, because of the EDF network, and 60Hz in the United States, for example. For some years now, however, and especially since the advent of digital technology and LCD screens, whose technology can hold an image on screen without refreshing it (unlike cathode ray tubes), screens have been able to handle different input frequencies. But no one had previously considered the possibility of changing this refresh rate "on the fly".

It was then that, seeing in the ever-growing “gaming” industry a new source of customers, the world of TVs and monitors decided to break out of its outdated shackles.

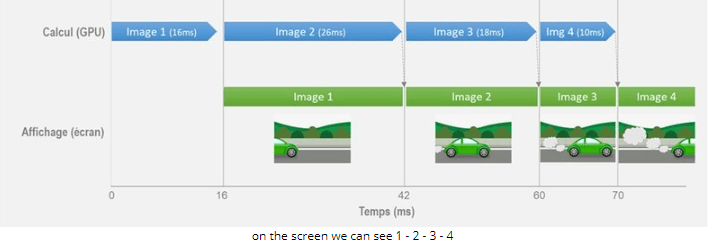

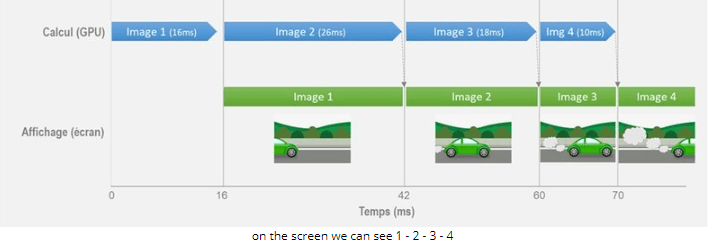

Finally comes VRR, for Variable Refresh Rate (FRF in proper French), a gamer-friendly technology, or almost. Remember the principle described above: “it is up to the source to align itself with the screen”. That is so 2017… It is now possible to have the source and the display communicate, so that the display does not refresh until the source tells it to, that is, as soon as an image is ready. What is the result? No more desynchronization possible. No more “tearing”, no more “stuttering”, for a greatly improved impression of fluidity and a greatly reduced delay.
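As a rough illustration of the idea, here is a small Python sketch of a display that refreshes as soon as the source has an image ready. The function name `vrr_present_times` and the `max_refresh_hz` limit are assumptions made for the example (a real panel also has a minimum refresh rate, which is ignored here).

```python
def vrr_present_times(render_times_ms, max_refresh_hz=120.0):
    """Times (in ms) at which each image reaches the screen with VRR.

    Assumption: the screen refreshes the moment an image is ready, except
    that it cannot refresh faster than its maximum refresh rate.
    """
    min_interval = 1000.0 / max_refresh_hz
    shown = []
    clock = 0.0
    last = None
    for render_ms in render_times_ms:
        ready = clock + render_ms
        if last is not None:
            # The panel cannot refresh sooner than its shortest cycle.
            ready = max(ready, last + min_interval)
        shown.append(ready)
        last = ready
        clock = ready                     # next render starts right away
    return shown

# Images that take 17 ms each are displayed every 17 ms, with no waiting.
print(vrr_present_times([17.0, 17.0, 17.0]))
```

With this model the display interval simply follows the render time, so there is no tick to miss and nothing to hold back.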

What are the benefits of VRR?

Besides consigning synchronization faults to the realm of bad memories, VRR also allows more varied frame rates! Until now, developers targeting performance (in frames per second) compatible with 60Hz screens had no choice but to aim for 30 or 60 fps and use V-Sync to smooth over the fluctuations. Now we can expect all kinds of intermediate frame rates to appear! Imagine that a developer, for whatever reason, cannot fit all his calculations into the roughly 16.7ms required by the famous 60 fps, but consistently needs 17ms. Until now, he could offer either a game with permanent tearing (unplayable), or a title riddled with micro-jerks and lag (uncomfortable), or else fall back on a 30 fps mode, ultra stable because of its huge margin. Now he can stop racking his brain and let the horses loose, because his game will run at a steady 58 fps or so (17ms per frame). We won't even see the difference from 60 fps!
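For anyone who wants to check the arithmetic, the conversion between frame time and frame rate is a simple division. The helper names below are invented for this example.

```python
def frame_budget_ms(fps):
    """Time available to compute one image at a given frame rate."""
    return 1000.0 / fps

def fps_from_frame_time(frame_ms):
    """Frame rate obtained when every image takes frame_ms to compute."""
    return 1000.0 / frame_ms

print(round(frame_budget_ms(60), 2))       # 16.67 ms per image at 60 fps
print(round(fps_from_frame_time(17), 1))   # 58.8 fps at 17 ms per image
```

So a game that needs 17 ms per image lands just under 59 fps instead of having to drop all the way to 30.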

Reservations about VRR

This new paradigm significantly shifts the optimization challenge that any player expects a developer to take on, or at least VRR can help reduce the work required in this area. Still, it is to be hoped that this technology will only be used to absorb code drift or temporary overloads, and that stability will remain a priority for developers. We also hope that studios will keep enough energy to achieve the best possible performance, and that they will not hide behind what the technology allows to tell us that “50 fps is already not bad, it's still better than 30” where previously they would have trimmed the excess and managed to release a game at 60 fps.

- Micro-jerks, known in English by the rather colorful term “stutter”, arise with V-Sync because each image can be displayed for more or fewer refresh cycles than the previous or the next one, which strongly harms the impression of fluidity.

- Delay, or “lag” in English, arises with V-Sync because the whole calculation cycle is held up: the previous image must be sent before the calculation of the next one can begin. This is a different form of input lag from the one usually quoted in screen specifications, where the screen (cathode ray tubes excepted) takes a certain amount of time to process what it receives before it starts scanning. Unfortunately, these two forms of delay add up.
